ROS 2 Software Stack for Autonomous Navigation

This core software lesson guides students through building the "brain" of an autonomous mobile robot (AMR) using the ROS 2 framework. Students implement key autonomy modules such as localization, mapping, and path planning using open-source ROS 2 packages. The final result is a fully integrated navigation system capable of moving through unknown environments safely and intelligently.

Core Concepts

- Configuring and tuning the Navigation2 (Nav2) stack

- Implementing odometry, TF tree broadcasting, and localization with AMCL

- Performing mapping and SLAM using tools like

slam_toolboxorcartographer - Setting up sensor drivers (e.g., LIDAR, IMU, encoders) and robot model (URDF/Xacro)

- Developing and launching ROS 2 nodes in Python or C++

- Performing simulation testing in Gazebo and RViz

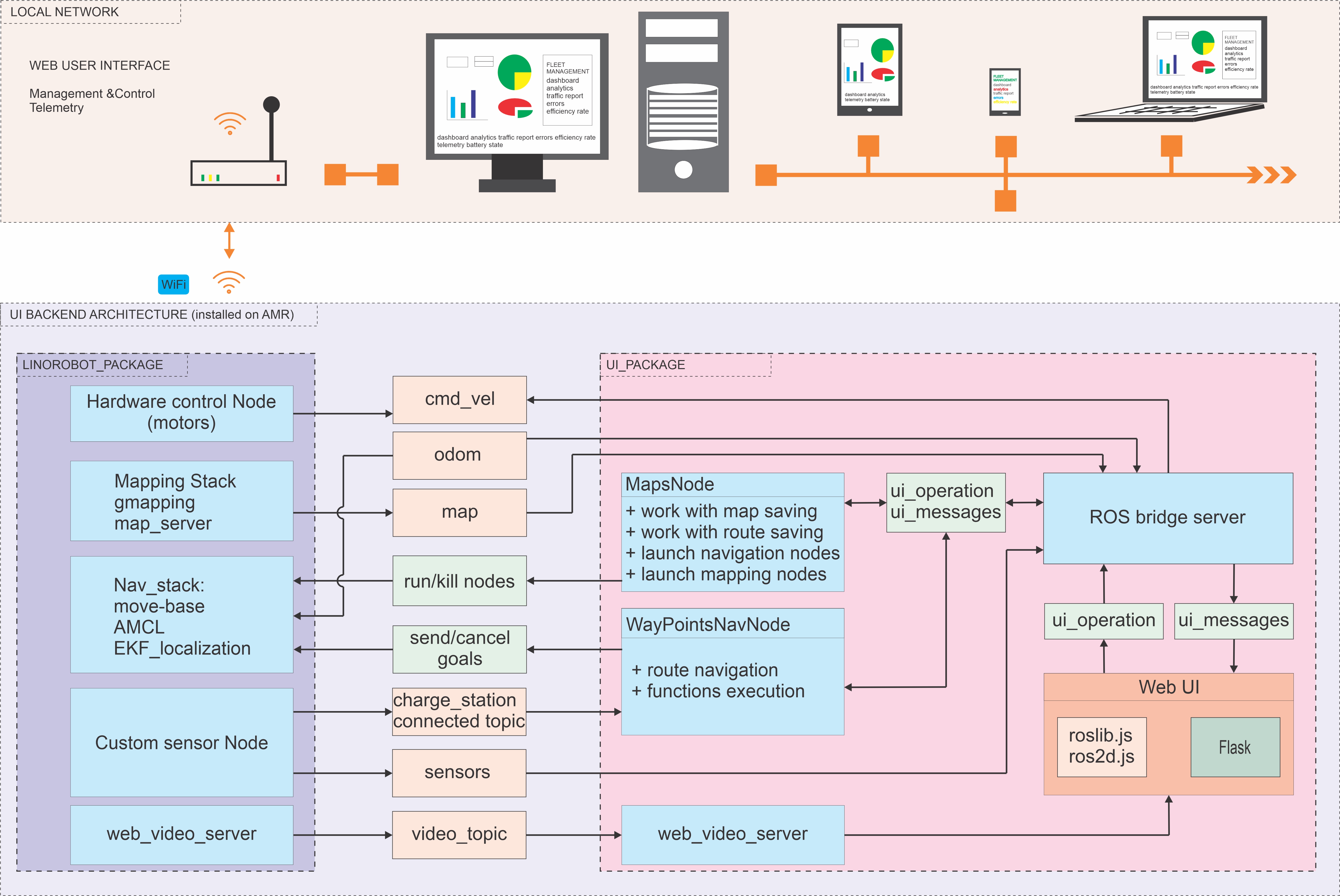

System Overview

- The robot’s physical model is described using URDF or Xacro, including joints, wheels, and sensors.

- Sensor drivers are launched to stream real-time data from LIDARs, IMUs, and wheel encoders.

- Odometry and TF frames are published, forming the basis of robot localization.

- The AMCL node is used for localization against a map, or SLAM is used for building maps dynamically.

- The Nav2 stack plans paths, avoids obstacles, and sends velocity commands to the base controller.

- Simulation and visualization are conducted using Gazebo and RViz, with testing routines and tuning cycles.

Learning Outcomes

- Set up and configure a modular ROS 2 software stack

- Develop and launch ROS 2 nodes in both Python and C++

- Build real-time localization and mapping pipelines

- Understand and manipulate the TF2 tree and ROS message types

- Integrate and test robot software in simulated environments

- Gain practical skills in the ROS 2 development workflow

Optional Extensions

- Replace AMCL with EKF-based localization using robot_localization package

- Use Nav2 Behavior Tree customization to define custom navigation logic

- Add multi-robot simulation scenarios in Gazebo

- Deploy and benchmark on a physical robot for real-world tuning

- Create a teleoperation or diagnostic GUI for remote mission support